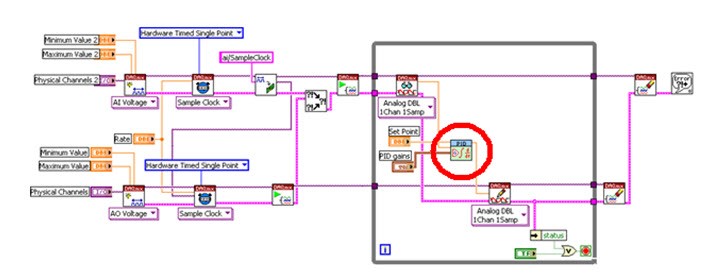

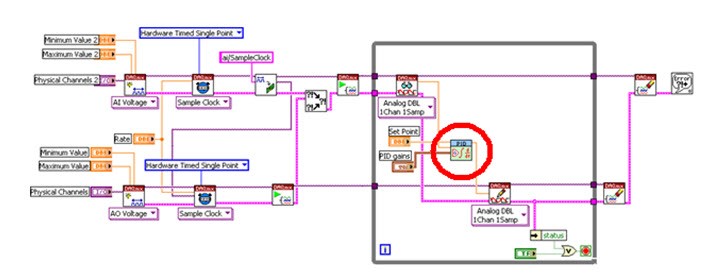

LabVIEW VI for implementing a single-channel PID controller:

https://knowledge.ni.com/KnowledgeArticleDetails?id=kA00Z0000019QlFSAU&l=en-IN

Use queues and channel wires

Use a queue to communicate between the analog read and analog write tasks

One portion of code is creating (or producing) data to be processed (or consumed) by another portion. The advantage of using a queue is that the producer and consumer will run as parallel processes and their rates do not have to be identical.
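The same producer/consumer idea can be sketched in Python with the standard-library `queue` and `threading` modules. This is only an illustration of the pattern, not the LabVIEW implementation: the function names, the `SENTINEL` marker, and the sleep periods are assumptions, and the "samples" are placeholder numbers rather than DAQ readings.

```python
import queue
import threading
import time

SENTINEL = None  # hypothetical end-of-stream marker


def producer(q, n_samples, period_s):
    """Stand-in for the analog read task: pushes one sample per period."""
    for i in range(n_samples):
        q.put(float(i))          # placeholder for a measured value
        time.sleep(period_s)
    q.put(SENTINEL)              # tell the consumer there is no more data


def consumer(q, results, period_s):
    """Stand-in for the analog write task: drains the queue at its own pace."""
    while True:
        sample = q.get()         # blocks until data is available
        if sample is SENTINEL:
            break
        results.append(2.0 * sample)  # placeholder processing
        time.sleep(period_s)


def run_demo(n_samples=5):
    q = queue.Queue()            # unbounded FIFO shared by both threads
    results = []
    t_prod = threading.Thread(target=producer, args=(q, n_samples, 0.001))
    t_cons = threading.Thread(target=consumer, args=(q, results, 0.003))
    t_prod.start()
    t_cons.start()
    t_prod.join()
    t_cons.join()
    return results


if __name__ == "__main__":
    print(run_demo())
```

The consumer here is deliberately slower than the producer; the queue absorbs the difference, so no sample is lost and the order is preserved.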

https://knowledge.ni.com/KnowledgeArticleDetails?id=kA00Z000000P7OfSAK&l=en-IN

https://www.ni.com/en-in/support/documentation/supplemental/16/channel-wires.html

PID control LabVIEW example videos:

https://www.youtube.com/watch?v=lyw_Ygeti3I&ab_channel=NIDevZone

https://www.youtube.com/watch?v=qMydcfZ_ZSs&ab_channel=FlexRIO

Thesis on PID control with LabVIEW

http://www.diva-portal.org/smash/get/diva2:757138/FULLTEXT02

https://www.ni.com/en-in/innovations/white-papers/06/pid-theory-explained.html

Simple on-off control in LabVIEW

Basic on off control using labview
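For comparison, the decision logic of an on-off controller is small enough to sketch in Python. The deadband (`hysteresis`) is an assumption added here to avoid rapid switching around the setpoint; the function name and parameters are hypothetical and do not come from the LabVIEW example.

```python
def on_off_step(measurement, setpoint, hysteresis, output_on):
    """Return the new on/off state for one control step (with a deadband)."""
    if measurement < setpoint - hysteresis:
        return True       # too low: switch the actuator on
    if measurement > setpoint + hysteresis:
        return False      # too high: switch the actuator off
    return output_on      # inside the deadband: keep the previous state


if __name__ == "__main__":
    state = False
    for reading in (20.0, 24.5, 25.5, 26.5, 25.5):
        state = on_off_step(reading, setpoint=25.0, hysteresis=1.0, output_on=state)
        print(reading, state)
```

Setting `hysteresis` to 0 reduces this to a plain threshold comparison.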

Hardware-timed PID control using a shared sample clock and trigger


PID control with Python and NI-DAQmx
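As a starting point, a discrete PID loop can be sketched in plain Python. This is a minimal textbook form under stated assumptions, not a tested DAQ program: the `PID` class, the ±10 output limits, and the first-order plant in `simulate` are all hypothetical, and the plant stands in for the real analog read/write calls so that the sketch runs without hardware. There is no anti-windup handling.

```python
from dataclasses import dataclass


@dataclass
class PID:
    kp: float
    ki: float
    kd: float
    out_min: float = -10.0    # assumed output limits (e.g. a +/-10 V AO range)
    out_max: float = 10.0
    _integral: float = 0.0
    _prev_error: float = 0.0
    _first: bool = True

    def update(self, setpoint, measurement, dt):
        """Compute one controller output from the latest measurement."""
        error = setpoint - measurement
        self._integral += error * dt
        # Skip the derivative on the first call: there is no previous error yet.
        derivative = 0.0 if self._first else (error - self._prev_error) / dt
        self._first = False
        self._prev_error = error
        output = self.kp * error + self.ki * self._integral + self.kd * derivative
        return min(self.out_max, max(self.out_min, output))  # clamp to limits


def simulate(pid, setpoint=1.0, steps=2000, dt=0.01, tau=0.5):
    """Drive a first-order plant (dy/dt = (u - y) / tau) with the controller."""
    y = 0.0
    for _ in range(steps):
        u = pid.update(setpoint, y, dt)   # in a real loop: read AI, then write AO
        y += dt * (u - y) / tau           # forward-Euler step of the simulated plant
    return y


if __name__ == "__main__":
    print(simulate(PID(kp=2.0, ki=1.0, kd=0.0)))
```

In a real loop, `y` would come from an analog input read and `u` would go to an analog output write; `dt` should then be the measured loop period rather than a constant.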

Edit on 15 Oct 2022

From https://forums.ni.com/t5/Multifunction-DAQ/Continuous-write-analog-voltage-NI-cDAQ-9178-with-callbacks/td-p/4036271?profile.language=en

1) Your code comments refer to wanting to be able to update output signals at 100 Hz, presumably under software control. I assume this means you want <= 10 msec latency between your software deciding on an output value and having that output appear as a real world signal. This is pretty tricky to accomplish with a buffered output task, and (I suspect) likely impossible when the device is connected by USB or Ethernet. You may need to approach this with an unbuffered, software-timed, "on-demand" task and then live with the corresponding irregularities and uncertainties of software timing.

2) The rule of thumb wouldn't apply to situations where low latency is the priority. A lot of typical data acq and signal generation apps don't need real-time low latency. They generate pre-defined stimulus signals and collect data for post-processing later. The rule of thumb works well in those cases, allowing live displays with only *moderate* latency, enough for an operator to see what's going on.

3) In the past when I experimented with trying to do low latency hw-clocked AO, it wasn't trivial to get < 10 msec even with a PCIe device. I recall that in order to get there, I needed to set up a few different low-level and fairly advanced DAQmx properties to non-default values. I wouldn't have expected the defaults for a cDAQ system over USB to support that kind of usage so easily.

4)

Sample rate and samples per channel

Hardware timed single point
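The software-timed, "on-demand" approach from point 1 of the forum reply can be sketched as a paced loop that also records how irregular the timing is. This is a hedged sketch: `run_software_timed` and `write_sample` are hypothetical names, and the writer is an arbitrary callable so the example runs without hardware. With the `nidaqmx` package, `write_sample` could plausibly be the `write` method of a task that has an analog output channel and no sample clock configured, but that pairing is an assumption and has not been tested here.

```python
import time


def run_software_timed(write_sample, rate_hz, n_updates):
    """Call write_sample(value) n_updates times, paced by the OS clock.

    Returns the measured interval between consecutive writes, in seconds.
    """
    period = 1.0 / rate_hz
    intervals = []
    next_deadline = time.perf_counter()
    last_write = None
    for i in range(n_updates):
        # Sleep until the next deadline; the OS may wake us late.
        delay = next_deadline - time.perf_counter()
        if delay > 0:
            time.sleep(delay)
        write_sample(float(i % 2))        # placeholder output value
        now = time.perf_counter()
        if last_write is not None:
            intervals.append(now - last_write)
        last_write = now
        next_deadline += period           # fixed schedule, so errors do not accumulate
    return intervals


if __name__ == "__main__":
    written = []
    intervals = run_software_timed(written.append, rate_hz=100.0, n_updates=50)
    print("jitter (s):", max(intervals) - min(intervals))
```

The spread between the longest and shortest interval gives a rough picture of the software-timing uncertainty the reply warns about; it will vary with the operating system and machine load.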